Building scalable microservices with Kubernetes helps you deploy, manage, and scale applications efficiently. This article covers the key steps and practices to ensure your microservices architecture is robust and high-performing.

Understanding Microservices and Kubernetes

Microservices architecture breaks applications into smaller, self-contained units that can be independently deployed and scaled. This approach contrasts with the monolithic architectural style, where all components are tightly coupled, making updates and scaling more complex. Because the services are loosely coupled, a change or failure in one is less likely to ripple through the rest, which allows for greater flexibility and resilience.

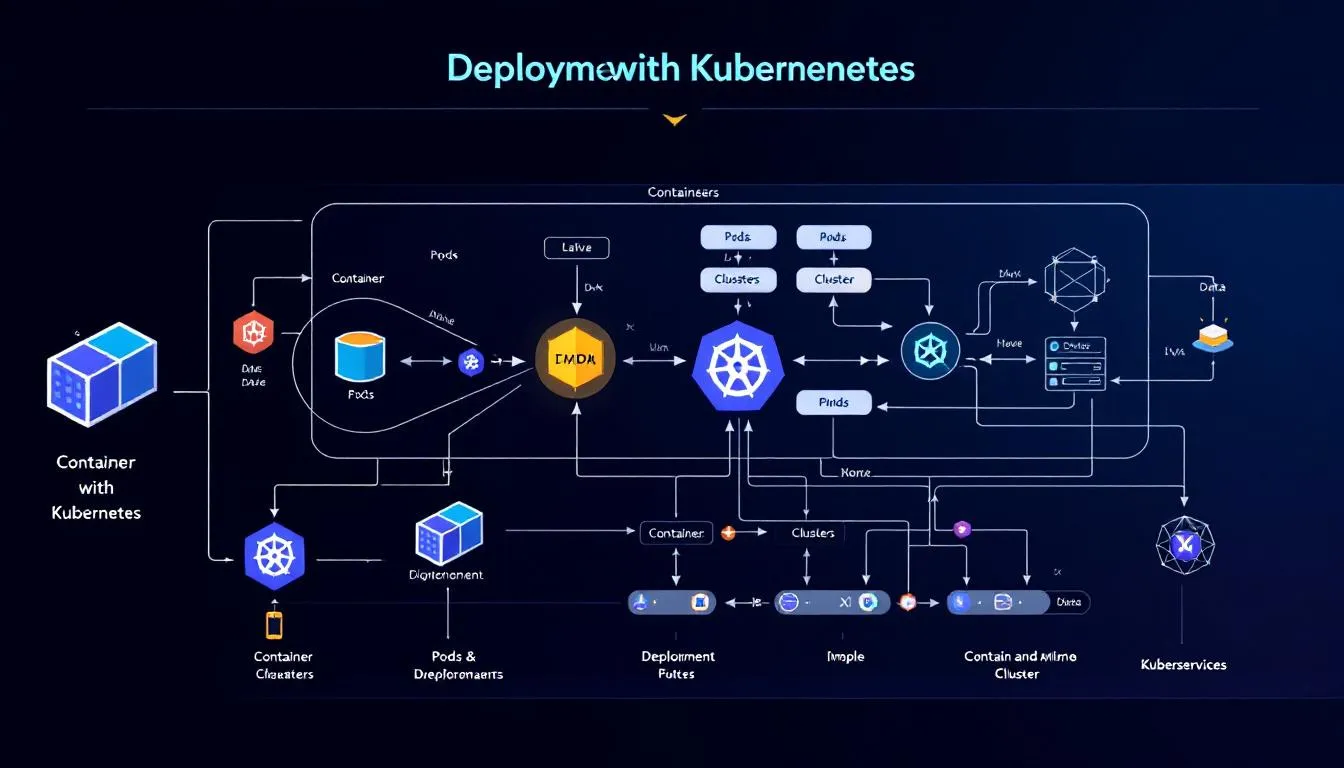

Kubernetes is an open-source container orchestration platform well suited to deploying and managing microservices. It automates the deployment, scaling, and operation of containerized applications, reducing the manual effort involved in managing microservices. Kubernetes continuously works to keep your microservices in their desired state, even when failures occur.

A Kubernetes cluster consists of a control plane that manages workloads and worker nodes where application containers run. The smallest deployable unit in Kubernetes is a pod, which houses one or more containers of a microservice. This architecture allows for flexible deployment strategies, including rolling updates and blue-green deployments, to minimize downtime and ensure continuous availability.

Understanding these fundamental concepts is crucial for effectively managing microservices with Kubernetes. Leveraging Kubernetes’ capabilities enables the creation of a scalable, resilient, and efficient microservices architecture.

Benefits of Using Kubernetes for Scalable Microservices

Kubernetes simplifies deploying and running distributed applications at scale, making it an ideal choice for modern microservices architectures. One of the key benefits is its ability to dynamically adjust resources based on usage, preventing over-provisioning and allowing for efficient resource management and cost-efficient scaling. During high traffic periods, Kubernetes can scale out by adding more pods to handle the increased demand, ensuring that your applications remain performant.

Kubernetes offers several key features for APIs built on microservices:

- Allows APIs to be scaled independently, accommodating changes in load without affecting overall performance.

- Uses components such as ReplicaSets to maintain application availability by automatically replacing failed pods.

- Ensures high availability and resilience.

- Provides orchestration capabilities valuable for resource-intensive core API functionalities, ensuring continuous operation even under varying loads.

Kubernetes enhances DevOps efficiency by:

- Simplifying the process of integrating containers and accessing storage across different cloud platforms.

- Facilitating easy migration of containerized applications between on-premises and various cloud infrastructures, ensuring uninterrupted service.

- Helping achieve a scalable microservices architecture that is cost-effective and highly available.

Setting Up Your Kubernetes Cluster

Kubernetes can be set up on local machines, private data centers, or cloud environments, offering flexibility in deployment options. For beginners, community-supported tools such as minikube or kind are a good fit for local setups. kubeadm is the Kubernetes project's own tool for bootstrapping clusters, providing a straightforward way to set up and configure your cluster.

When setting up a Kubernetes cluster, it is advisable to run Kubernetes components as container images within the cluster whenever possible. This approach ensures consistency and simplifies the management of the control plane. Components that run containers themselves, notably the kubelet, are the exception. Additionally, each node needs a suitable container runtime, such as containerd or CRI-O. Kubernetes is designed to run its control plane on Linux systems, so ensure that your environment aligns with this requirement.

Setting up your Kubernetes cluster is the first step in deploying and managing microservices with Kubernetes. Following best practices and using the right tools creates a robust and scalable environment for your microservices.

Deploying Microservices with Kubernetes

The deployment process involves the following steps:

- Packaging microservices into container images using Dockerfiles, which define the required environment and dependencies.

- Using these container images as the building blocks for deploying microservices in Kubernetes.

- Defining Kubernetes resources such as Deployments and Services to manage how microservices are deployed and configured.

- Using a Deployment resource to specify how many microservice instances to run and the update process for those instances.

Deployment of microservices is initiated using the kubectl apply command, which sets up the necessary Pods and other resources. Service resources in Kubernetes are responsible for exposing microservices, managing their ports and protocols, and facilitating communication through service objects. This ensures that microservices can communicate with each other and with external clients effectively.
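The Deployment and Service resources described above can be sketched as plain Python dictionaries and serialized to JSON, which `kubectl apply` accepts alongside YAML. This is a minimal illustration, not a production manifest: the helper names, the `app` label convention, the default ports, and the image reference in the usage note are all assumptions made for the example.

```python
import json


def build_deployment(name, image, replicas=3, port=8080):
    """Return a Deployment manifest that runs `replicas` copies of one container."""
    labels = {"app": name}  # assumed labeling convention for this sketch
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


def build_service(name, port=80, target_port=8080):
    """Return a Service manifest that routes traffic to pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


def to_manifest(*resources):
    """Bundle resources into a single JSON List document."""
    bundle = {"apiVersion": "v1", "kind": "List", "items": list(resources)}
    return json.dumps(bundle, indent=2)
```

Printing `to_manifest(build_deployment("orders", "example.com/orders:1.0"), build_service("orders"))` and piping the result to `kubectl apply -f -` would create both resources, assuming the hypothetical image exists in a registry the cluster can reach.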

Networking configuration is essential for microservices that need to communicate with each other, often involving service meshes. Kubernetes employs service discovery using DNS to facilitate the identification of services, ensuring that applications can find healthy instances even when pods terminate and are replaced. Leveraging Kubernetes’ capabilities streamlines deployment processes, ensuring a scalable and resilient microservices architecture.

Scaling Microservices in Kubernetes

Kubernetes can automatically scale applications based on demand, adjusting resources dynamically to optimize performance. Key aspects include:

- During low-demand periods, Kubernetes scales in by reducing the number of pods to conserve resources.

- Dynamic scaling adapts to varying loads, which helps maintain performance as the demands on modern applications grow.

The Horizontal Pod Autoscaler (HPA) dynamically adjusts the number of pods in a workload based on observed metrics such as CPU and memory usage. HPA runs a control loop that periodically checks resource utilization to determine whether scaling actions are necessary. Kubernetes also allows scaling based on custom metrics, enabling resource management tailored to application needs. For workloads that need more resources per pod rather than more pods, the Vertical Pod Autoscaler (VPA), a separately installed add-on, can adjust CPU and memory requests automatically.
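The core of the HPA control loop is a ratio: the desired replica count is the current count multiplied by the observed metric over the target metric, rounded up. The sketch below models only that calculation. It assumes a single metric and the documented default tolerance of 0.1, and it leaves out details the real controller handles, such as pods that are not yet ready and stabilization windows. The function and parameter names are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified model of the HPA replica calculation for one metric."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four pods averaging 90% CPU against a 60% target give a ratio of 1.5, so the function returns six replicas. The same four pods at 30% CPU give a ratio of 0.5 and scale in to two.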

Vertical scaling in Kubernetes involves allocating additional resources to existing pods rather than increasing the number of pods. Kubernetes supports both horizontal and vertical scaling methods, providing flexibility in how you manage and scale your microservices. Leveraging these scaling capabilities ensures that your applications remain performant and cost-efficient.

Load Balancing and Service Discovery

Kubernetes services facilitate service discovery, allowing applications to find healthy instances, even when pods terminate and are replaced. This capability is crucial for the efficient intercommunication between microservices. Kubernetes employs DNS-based service discovery to ensure that your applications can locate and connect with each other seamlessly.
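With DNS-based discovery, a Service is reachable at a predictable name of the form `<service>.<namespace>.svc.<cluster-domain>`. The helper below builds that name. It assumes the common default cluster domain `cluster.local`, which a cluster administrator can change, and the service and namespace names in the usage note are hypothetical.

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Return the fully qualified in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_url(service, port, namespace="default", scheme="http"):
    """Return a base URL that another pod in the cluster could call."""
    return f"{scheme}://{service_dns_name(service, namespace)}:{port}"
```

A client in any namespace could then call `service_url("orders", 80, namespace="shop")`, which resolves to the Service rather than to an individual pod, so pod replacements stay invisible to the caller.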

Load balancing is another critical aspect of managing microservices in Kubernetes. Load balancing distributes traffic across multiple instances of a service, ensuring high availability and optimal performance. This capability is essential for maintaining the reliability of your microservices architecture, especially during periods of high demand.

Implementing Fault Tolerance and Self-Healing

Kubernetes supports self-healing, which automatically restores the desired state of applications after failures. This self-healing capability includes automatically restarting or replacing failed containers to ensure application reliability. Kubernetes utilizes liveness and readiness probes to check the health of pods, ensuring that only functional instances serve traffic.
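Liveness and readiness probes are declared per container. The sketch below builds a container spec with HTTP probes. The `/healthz` and `/ready` paths, the port, and the timing values are assumptions chosen for illustration, and the application itself must serve those endpoints.

```python
def container_with_probes(name, image, port=8080):
    """Return a container spec with HTTP liveness and readiness probes."""
    return {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Liveness: the kubelet restarts the container after repeated failures.
        "livenessProbe": {
            "httpGet": {"path": "/healthz", "port": port},
            "initialDelaySeconds": 10,
            "periodSeconds": 10,
            "failureThreshold": 3,
        },
        # Readiness: a failing pod is removed from Service endpoints
        # until the probe succeeds again; it is not restarted.
        "readinessProbe": {
            "httpGet": {"path": "/ready", "port": port},
            "periodSeconds": 5,
            "failureThreshold": 3,
        },
    }
```

The returned dictionary slots into the `containers` list of a pod template. Keeping the two probes on separate endpoints lets a service report "alive but not ready" while it warms up or waits on a dependency.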

When a pod fails, Kubernetes can automatically restart it to maintain application availability. Key features include:

- ReplicaSets ensure that a specific number of pod replicas are running.

- ReplicaSets automatically replace any pods that fail.

- This auto-restart capability is crucial for maintaining service during failures.
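The behavior in the list above comes down to a reconciliation step: compare the desired replica count with the pods that are still active and make up the difference. The function below is a toy model of that idea, not the controller's actual code. It assumes pods are represented only by their phase strings.

```python
def reconcile(desired, phases):
    """Return how many pods to create (positive) or delete (negative).

    `phases` is a list of pod phase strings. Pods that have finished or
    failed no longer count toward the desired replica total.
    """
    active = [p for p in phases if p not in ("Succeeded", "Failed")]
    return desired - len(active)
```

With three desired replicas and phases `["Running", "Failed", "Running"]`, the function returns 1: one replacement pod is needed to restore the desired state.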

Implementing fault tolerance measures in Kubernetes includes:

- Running multiple control plane nodes (a multi-master configuration) to provide redundancy and prevent a single point of failure.

- Using anti-affinity rules to distribute pods across different nodes, enhancing fault tolerance during node failures.

- Employing replicated persistent volumes to ensure critical data remains accessible even if one node fails.

These measures are essential for building a robust foundation to implement microservices architecture.
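As one concrete instance of the anti-affinity measure, the sketch below builds an `affinity` block that keeps pods with the same label off the same node. It assumes pods are labeled `app=<name>`, and it uses the well-known node label `kubernetes.io/hostname` as the topology key.

```python
def spread_across_nodes(app_label):
    """Return a pod `affinity` block that forbids co-locating replicas."""
    return {
        "podAntiAffinity": {
            # "required" is a hard rule: a pod stays unscheduled if no
            # node satisfies it.
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {
                    "labelSelector": {"matchLabels": {"app": app_label}},
                    "topologyKey": "kubernetes.io/hostname",
                }
            ]
        }
    }
```

The result belongs under `spec.template.spec.affinity` in a Deployment. A hard rule like this needs at least as many schedulable nodes as replicas; on smaller clusters, `preferredDuringSchedulingIgnoredDuringExecution` is the softer alternative.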

Monitoring and Logging

Monitoring tools like Metrics Server can be employed to track the performance and health of microservices in Kubernetes. These tools provide valuable insights into resource usage patterns, CPU utilization, and memory usage, helping you optimize resource allocation and identify performance bottlenecks. Kubernetes does not provide native storage for log data, so cluster-level logging requires a separate backend for log storage and analysis.

A node-level logging agent can be implemented as a DaemonSet to handle log collection across nodes. This ensures that logs from all application containers are collected and stored centrally. Key points include:

- Kubernetes supports the retrieval of logs from multiple streams, including standard output and error.

- When pods are evicted, their container logs are also removed unless managed separately.

- Log rotation is managed by the kubelet, which can be configured for size and file limits.
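A node-level logging agent of the kind described above can be sketched as a DaemonSet that mounts the node's log directory read-only. The agent name, image, and namespace here are placeholders, and a real agent would also need configuration for the backend that receives the logs.

```python
def logging_daemonset(name, image, namespace="kube-system"):
    """Return a DaemonSet manifest that runs one log agent pod per node."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Read the node's log files without modifying them.
                            "volumeMounts": [
                                {"name": "varlog", "mountPath": "/var/log", "readOnly": True}
                            ],
                        }
                    ],
                    "volumes": [{"name": "varlog", "hostPath": {"path": "/var/log"}}],
                },
            },
        },
    }
```

Because a DaemonSet schedules one pod on every eligible node, new nodes that join the cluster get an agent automatically.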

Effective monitoring and logging are crucial for diagnosing issues, tracking cluster activities, and ensuring the smooth operation of your microservices architecture.

Best Practices for Microservices Design

The Twelve-Factor App methodology focuses on principles that enhance the portability and scalability of applications across various environments. Each microservice should manage its dependencies explicitly to ensure isolation and ease of updates. Maintaining a single codebase tracked in a version control system is essential for managing multiple deployments effectively.

Microservices should be executed as stateless processes to maximize efficiency and scalability. Fast startup and graceful shutdown are critical for the robustness of individual microservices, allowing them to scale out quickly during traffic spikes and to finish in-flight work before they stop.
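Graceful shutdown matters in Kubernetes because a terminating pod's containers receive SIGTERM first and are killed only after the termination grace period expires. The sketch below shows one way a worker process could react: it finishes the current job and then stops picking up new ones. The `GracefulWorker` class and its job model are hypothetical.

```python
import signal
import threading


class GracefulWorker:
    """Processes jobs until a stop is requested, then exits cleanly."""

    def __init__(self):
        self._stop = threading.Event()
        self.processed = 0

    def request_stop(self, signum=None, frame=None):
        # Signature matches what signal.signal() expects from a handler.
        self._stop.set()

    def install_signal_handlers(self):
        # Call from the main thread of the process.
        signal.signal(signal.SIGTERM, self.request_stop)
        signal.signal(signal.SIGINT, self.request_stop)

    def run(self, jobs):
        for job in jobs:
            if self._stop.is_set():
                break  # stop taking new work; the job in flight already finished
            job()
            self.processed += 1
        return self.processed
```

A service would call `install_signal_handlers()` once at startup and then `run(...)` with its job source. The shutdown work has to fit within the pod's `terminationGracePeriodSeconds`, which defaults to 30 seconds.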

Following these best practices leads to the design of a scalable and resilient microservices architecture.

Security Considerations in Kubernetes

Disabling the ‘always allow’ authorization mode in favor of RBAC, rotating API server credentials, and using Pod Security Admission are foundational security configurations in Kubernetes. Service account tokens, which are issued as JWTs, should be short-lived and subject to expiration policies rather than stored as long-lived credentials. Implementing additional verification steps for sensitive operations can help protect against unauthorized changes.

Key aspects of Kubernetes security include:

- Automating RBAC policies with tools like Open Policy Agent to ensure consistent enforcement of security settings across environments.

- Using Kubernetes NetworkPolicies to define rules governing traffic flow between pods and external clients.

- Continuous monitoring to adapt access controls to new security threats in Kubernetes environments.
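To make the NetworkPolicy item concrete, the sketch below builds a policy that admits ingress to one application only from pods of another. The policy name and the `app` labels are assumptions. A policy like this takes effect only when the cluster's network plugin enforces NetworkPolicy.

```python
def allow_from_app(name, target_app, client_app, port, namespace="default"):
    """Return a NetworkPolicy allowing TCP ingress to target_app from client_app only."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods with this label, in the same namespace, may connect.
                    "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }
```

Once any ingress policy selects a pod, traffic that no rule allows is dropped, so `allow_from_app("orders-from-gateway", "orders", "gateway", 8080)` also blocks every other in-cluster client of the hypothetical `orders` pods.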

Using namespaces effectively can enhance Kubernetes security by isolating resources for different teams or projects. Integrating Kubernetes with external identity providers can streamline user authentication and improve security management. Following these security considerations ensures that your microservices architecture remains secure and resilient.

Accelerating Development Cycles

Kubernetes supports rolling updates, allowing software changes to be implemented without causing downtime. This capability enables smooth transitions to new versions of applications, ensuring continuous availability. Automating rollouts of new releases reduces manual errors and fosters consistent application setups across different environments.
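A rolling update avoids downtime by bounding how many pods may be added or taken away at any moment, through the Deployment settings `maxSurge` and `maxUnavailable`. The sketch below computes those bounds. It assumes the documented defaults of 25% each, with percentages rounded up for surge and down for unavailability. The function name and return keys are illustrative.

```python
def rolling_update_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return the pod-count limits a rolling update must stay within."""

    def resolve(value, round_up):
        # Accept an absolute number or a percentage string such as "25%".
        if isinstance(value, str) and value.endswith("%"):
            scaled = int(value[:-1]) * replicas
            # Integer arithmetic avoids floating-point rounding surprises.
            return -(-scaled // 100) if round_up else scaled // 100
        return int(value)

    surge = resolve(max_surge, round_up=True)
    unavailable = resolve(max_unavailable, round_up=False)
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }
```

With 10 replicas and the defaults, at most 13 pods exist during the rollout and at least 8 remain available. Setting `max_unavailable=0` trades rollout speed for full capacity throughout the update.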

Using CI/CD pipelines with Kubernetes automation can significantly streamline development workflows and minimize deployment delays. Microservices and Kubernetes enable faster release of updates and features, accelerating the overall development cycles.

An optimized inner development loop, the cycle of coding, building, and testing a change, speeds up iteration and makes problems in API development easier to track down. Leveraging these capabilities enables development teams to reduce errors and deliver high-quality software more efficiently, resulting in cost savings.

Summary

In summary, mastering the art of building scalable microservices with Kubernetes involves understanding the fundamentals of microservices and Kubernetes, setting up your Kubernetes cluster, deploying and scaling microservices, and implementing fault tolerance, monitoring, and security measures. By following best practices and leveraging Kubernetes’ capabilities, you can build a resilient, efficient, and scalable microservices architecture.

As you continue your journey in the world of Kubernetes and microservices, remember that the key to success lies in continuous learning and adaptation. Embrace the power of Kubernetes, and unlock the full potential of your microservices architecture.